Book Recommendation System Using Content-Based Filtering and Cosine Similarity

Welcome! This is a project I built a while back. It recommends books using cosine similarity.

What is a Content-Based Filtering System?

A Content-Based Filtering System is a recommendation technique that suggests items to users based on the characteristics or "content" of the items themselves. For books, this can include factors like their description, author, genre, or even keywords.

In this project, we focus solely on the description of books to demonstrate how such a system works.

How It Works

Let’s look at an example:

Books:

- Book 1, The Art of Baking Bread: A comprehensive guide on how to bake various types of bread at home.

- Book 2, The Art of Making Pasta: A comprehensive guide on how to make different types of pasta from scratch.

- Book 3, The Secrets of Astrophysics: Explore the fascinating world of astrophysics, uncovering the mysteries of the universe and its celestial objects.

If you compare the descriptions, you'll notice that Book 1 and Book 2 are similar because they share common keywords like “comprehensive”, “guide”, and “types”. On the other hand, Book 3 doesn’t share these words, making it distinct.

The system analyzes these similarities to recommend books with related content.
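The "shared keywords" idea can be checked with a few lines of Python. This is a minimal sketch, not the project's code. It assumes that text is lowercased and split on letters, and it uses a small hypothetical stop-word list so that filler words like "a" and "of" do not count as shared keywords.

```python
import re

# Book descriptions from the example above
descriptions = {
    "The Art of Baking Bread": "A comprehensive guide on how to bake various types of bread at home.",
    "The Art of Making Pasta": "A comprehensive guide on how to make different types of pasta from scratch.",
    "The Secrets of Astrophysics": (
        "Explore the fascinating world of astrophysics, uncovering the "
        "mysteries of the universe and its celestial objects."
    ),
}

# Hypothetical stop-word list: filler words that say nothing about the topic
STOP_WORDS = {"a", "an", "and", "at", "from", "how", "its", "of", "on", "the", "to"}


def keywords(text):
    """Lowercase the text, split it into words, and drop stop words."""
    return set(re.findall(r"[a-z']+", text.lower())) - STOP_WORDS


bread, pasta, astro = (keywords(d) for d in descriptions.values())
print(sorted(bread & pasta))  # ['comprehensive', 'guide', 'types']
print(sorted(bread & astro))  # []
print(sorted(pasta & astro))  # []
```

Without the stop-word list, Book 3 would also "share" the word "of" with Books 1 and 2. This is why real systems usually filter such words out.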

Another Example

Consider these books:

- The Hunger Games: A thrilling adventure of a young girl who fights for survival in a cruel and oppressive society.

- Divergent: In a divided society, a young girl discovers her unique abilities and fights against a corrupt system.

- The Hitchhiker's Guide to the Galaxy: A comedic science fiction classic following the misadventures of a human and his alien friend as they travel the universe.

Here, The Hunger Games and Divergent share themes of oppression, survival, and fighting against a system, making them similar. However, The Hitchhiker's Guide to the Galaxy is unrelated, focusing on humor and space adventures.
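Below is a sketch of how such a recommender could rank these three books. It is written from scratch here and is not taken from the project. The names `vectorize`, `cosine` and `recommend` are hypothetical, and so is the stop-word list. Each description becomes a word-count vector, and the other books are sorted by their cosine similarity to the chosen book.

```python
import math
import re
from collections import Counter

# Hypothetical stop-word list: common filler words that carry no theme
STOP_WORDS = {"a", "an", "and", "as", "for", "her", "his", "in", "of", "the", "they", "who"}

books = {
    "The Hunger Games": "A thrilling adventure of a young girl who fights for survival in a cruel and oppressive society.",
    "Divergent": "In a divided society, a young girl discovers her unique abilities and fights against a corrupt system.",
    "The Hitchhiker's Guide to the Galaxy": (
        "A comedic science fiction classic following the misadventures of a human "
        "and his alien friend as they travel the universe."
    ),
}


def vectorize(text):
    """Word-frequency vector (as a Counter) of the lowercased text, minus stop words."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(w for w in words if w not in STOP_WORDS)


def cosine(u, v):
    """Dot product divided by the product of magnitudes; 0.0 if either vector is empty."""
    dot = sum(count * v[word] for word, count in u.items())
    norms = math.sqrt(sum(c * c for c in u.values())) * math.sqrt(sum(c * c for c in v.values()))
    return dot / norms if norms else 0.0


def recommend(title, books, top_n=2):
    """Return the top_n other books, most similar first, as (title, score) pairs."""
    vectors = {t: vectorize(d) for t, d in books.items()}
    scores = [(other, cosine(vectors[title], vec)) for other, vec in vectors.items() if other != title]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:top_n]


for other, score in recommend("The Hunger Games", books):
    print(f"{other}: {score:.3f}")
# Divergent: 0.402
# The Hitchhiker's Guide to the Galaxy: 0.000
```

Divergent scores higher because it shares "young", "girl", "fights" and "society" with The Hunger Games. The Hitchhiker's Guide shares no words once stop words are removed.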

How Do We Measure Similarity?

To determine the similarity between two items, we use Cosine Similarity—a mathematical technique that compares text content based on the angle between their word frequency vectors.
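Written as a formula, the standard definition for two word-frequency vectors A and B over a word set of n words is:

```latex
\cos(\theta)
  = \frac{\mathbf{A} \cdot \mathbf{B}}{\lVert \mathbf{A} \rVert \, \lVert \mathbf{B} \rVert}
  = \frac{\sum_{i=1}^{n} A_i B_i}{\sqrt{\sum_{i=1}^{n} A_i^2} \; \sqrt{\sum_{i=1}^{n} B_i^2}}
```

Here A_i and B_i are the number of times the i-th word of the word set appears in each text.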

Let’s break it down:

1. Start with Sentences

For example:

- Sentence 1: "I like cats."

- Sentence 2: "I love dogs."

- Sentence 3: "Cats and dogs are cute."

2. Build a Word Set

From the sentences, create a set of all unique words, ignoring capitalization and punctuation:

- Word set: {I, like, cats, love, dogs, and, are, cute}
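The word set can be built in Python as follows. This is a minimal sketch. It assumes a simple regex tokenizer and lowercases every word, so "I" appears as "i" and "Cats" is merged with "cats".

```python
import re

sentences = ["I like cats.", "I love dogs.", "Cats and dogs are cute."]


def tokenize(sentence):
    """Lowercase a sentence and split it into words, dropping punctuation."""
    return re.findall(r"[a-z']+", sentence.lower())


# dict.fromkeys keeps only the first occurrence of each word, in order
word_set = list(dict.fromkeys(word for s in sentences for word in tokenize(s)))
print(word_set)  # ['i', 'like', 'cats', 'love', 'dogs', 'and', 'are', 'cute']
```

An ordered list is used instead of a Python `set` so that every word keeps a fixed position. Each position becomes one dimension of the frequency vectors.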

3. Count Word Frequencies

Now, count how often each word appears in each sentence:

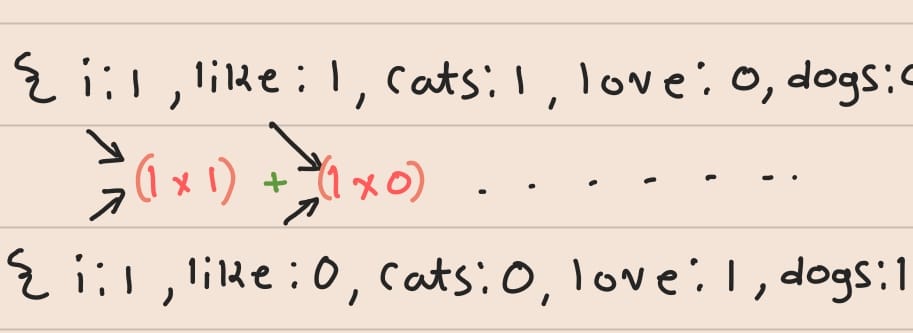

- Sentence 1: {I: 1, like: 1, cats: 1, love: 0, dogs: 0, and: 0, are: 0, cute: 0}

- Sentence 2: {I: 1, like: 0, cats: 0, love: 1, dogs: 1, and: 0, are: 0, cute: 0}

- Sentence 3: {I: 0, like: 0, cats: 1, love: 0, dogs: 1, and: 1, are: 1, cute: 1}
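The counting step can be written as a small function that turns a sentence into a vector. The vector follows the order of the word set. This is a sketch. `frequency_vector` is a hypothetical helper name, and words are lowercased as in the word set.

```python
import re
from collections import Counter

sentences = ["I like cats.", "I love dogs.", "Cats and dogs are cute."]
word_set = ["i", "like", "cats", "love", "dogs", "and", "are", "cute"]


def frequency_vector(sentence, vocabulary):
    """Count each vocabulary word in the sentence, in vocabulary order."""
    counts = Counter(re.findall(r"[a-z']+", sentence.lower()))
    return [counts[word] for word in vocabulary]


vectors = [frequency_vector(s, word_set) for s in sentences]
for vector in vectors:
    print(vector)
# [1, 1, 1, 0, 0, 0, 0, 0]
# [1, 0, 0, 1, 1, 0, 0, 0]
# [0, 0, 1, 0, 1, 1, 1, 1]
```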

4. Calculate Cosine Similarity

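To compare two sentences, treat each one as a vector of word frequencies and calculate:

- Dot Product: Multiply corresponding word frequencies and sum them up.

- Magnitude: For each sentence, calculate the square root of the sum of its squared word frequencies.

- Cosine Similarity: Divide the dot product by the product of the two magnitudes.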
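The three calculations above map directly onto three small Python functions. This is a sketch, and the function names are illustrative. Returning 0.0 for an all-zero vector is an added assumption, which avoids division by zero for empty text.

```python
import math


def dot_product(u, v):
    """Multiply corresponding word frequencies and sum them up."""
    return sum(a * b for a, b in zip(u, v))


def magnitude(v):
    """Square root of the sum of squared word frequencies."""
    return math.sqrt(sum(a * a for a in v))


def cosine_similarity(u, v):
    """Dot product divided by the product of magnitudes (0.0 if either is zero)."""
    norms = magnitude(u) * magnitude(v)
    return dot_product(u, v) / norms if norms else 0.0


# Word-frequency vectors for Sentence 1 and Sentence 2 over
# the word set {I, like, cats, love, dogs, and, are, cute}
s1 = [1, 1, 1, 0, 0, 0, 0, 0]
s2 = [1, 0, 0, 1, 1, 0, 0, 0]

print(dot_product(s1, s2))           # 1
print(magnitude(s1), magnitude(s2))  # both sqrt(3), about 1.732
print(cosine_similarity(s1, s2))     # about 0.333
```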

Example: Comparing Sentence 1 and Sentence 2

- Dot product of vectors = (1 * 1) + (1 * 0) + (1 * 0) + (0 * 1) + (0 * 1) + (0 * 0) + (0 * 0) + (0 * 0) = 1

- Product of magnitudes = √(1² + 1² + 1² + 0² + 0² + 0² + 0² + 0²) * √(1² + 0² + 0² + 1² + 1² + 0² + 0² + 0²) = √3 * √3 = 3

- Cosine Similarity = 1 / 3 ≈ 0.333

Repeat this process for all pairs of sentences to find their similarity scores. A higher score means greater similarity.
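The sketch below scores all three sentence pairs. It stores each sentence as a sparse `Counter` instead of a full vector. Words missing from a sentence count as 0, so the result equals the full-vector calculation. The tokenizer and helper names are assumptions made for illustration.

```python
import math
import re
from collections import Counter
from itertools import combinations

sentences = ["I like cats.", "I love dogs.", "Cats and dogs are cute."]


def vectorize(sentence):
    """Sparse word-frequency vector: lowercase words mapped to counts."""
    return Counter(re.findall(r"[a-z']+", sentence.lower()))


def cosine_similarity(u, v):
    """Dot product divided by the product of magnitudes (0.0 if either is empty)."""
    dot = sum(count * v[word] for word, count in u.items())
    norms = math.sqrt(sum(c * c for c in u.values())) * math.sqrt(sum(c * c for c in v.values()))
    return dot / norms if norms else 0.0


vectors = [vectorize(s) for s in sentences]
for i, j in combinations(range(len(sentences)), 2):
    score = cosine_similarity(vectors[i], vectors[j])
    print(f"Sentence {i + 1} vs Sentence {j + 1}: {score:.3f}")
# Sentence 1 vs Sentence 2: 0.333
# Sentence 1 vs Sentence 3: 0.258
# Sentence 2 vs Sentence 3: 0.258
```

Sentences 1 and 2 come out most similar because they share "I". Sentence 3 is longer (magnitude √5), so its single shared word counts for slightly less.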

Why Use Cosine Similarity?

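Cosine Similarity is effective because:

- It ignores differences in text length. The score depends only on the relative word frequencies, so a sentence and the same sentence written twice score 1.

- It produces a bounded score. In general, cosine similarity ranges from -1 (opposite directions) to 1, but word frequencies are never negative, so scores between word-frequency vectors fall between 0 and 1:
  - 1: The same words in the same proportions (e.g. identical content)
  - 0: No words in common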
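The length-invariance claim is easy to check. In the sketch below, repeating a sentence three times triples every count, but the cosine similarity with the original stays at 1. The tokenizer and helper names are illustrative assumptions.

```python
import math
import re
from collections import Counter


def vectorize(text):
    """Count lowercase words, ignoring punctuation."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(u, v):
    """Dot product divided by the product of magnitudes (0.0 if either text is empty)."""
    dot = sum(count * v[word] for word, count in u.items())
    norms = math.sqrt(sum(c * c for c in u.values())) * math.sqrt(sum(c * c for c in v.values()))
    return dot / norms if norms else 0.0


short = vectorize("I like cats.")
repeated = vectorize("I like cats. I like cats. I like cats.")

print(repeated)                                             # every count is tripled
print(cosine_similarity(short, repeated))                   # 1.0, up to floating-point rounding
print(cosine_similarity(short, vectorize("I love dogs.")))  # about 0.333
```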

Check It Out!

Explore the full project on GitHub to see the implementation in action.

For a more in-depth treatment, see the Wikipedia article on cosine similarity.